Methodology – Image Capture Process

The methodology we are using for this project is photogrammetry, in which photographs of a building are captured in different formats for the express purpose of generating a 3D model. Photogrammetry relies on overlap between images to determine how far particular details are from the camera. Typically, a high overlap (70% to 80%) gives a better point cloud, which is then used to create the model's surface. Modern software is very capable of using images for this purpose, although the theory behind the technique dates back to the late 1800s.
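
To make the 70% to 80% figure concrete, the sketch below works out roughly how far the camera should move between shots for a given overlap. It is a simplified pinhole model that assumes a flat subject facing the camera. The 10 m distance and 84° horizontal field of view in the usage lines are hypothetical values, not the specifications of our equipment.

```python
import math


def footprint_width(distance_m: float, hfov_deg: float) -> float:
    """Width of scene covered by one frame at `distance_m` (simple pinhole model)."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def shot_spacing(distance_m: float, hfov_deg: float, overlap: float) -> float:
    """How far to move the camera between shots so consecutive frames share `overlap`.

    `overlap` is a fraction, e.g. 0.75 for 75%.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in the range [0, 1)")
    # Only the non-overlapping part of the footprint is new coverage per shot.
    return footprint_width(distance_m, hfov_deg) * (1.0 - overlap)


if __name__ == "__main__":
    # Hypothetical setup: subject 10 m away, 84 degree horizontal field of view.
    for overlap in (0.7, 0.75, 0.8):
        step = shot_spacing(10.0, 84.0, overlap)
        print(f"{overlap:.0%} overlap: move about {step:.1f} m between shots")
```

Raising the overlap from 70% to 80% cuts the spacing by a third, so the same stretch of wall needs about 1.5 times as many photographs.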

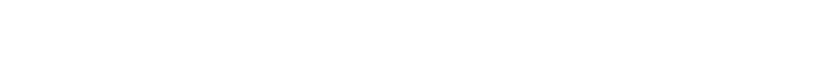

The blue squares mark the camera positions during the capture process: drone on the left, ground-based on the right.

Our methodology uses two photographic methods for capturing these images. We use a drone to capture the surrounding landscape and the tops of our target buildings. The drone images also capture details that are only partially visible from the ground, or not visible at all. While there are automatic methods of creating a flight path for these images, we have also used manual controls to ensure the details we need are photographed from enough different angles. This technique was developed only through practice; we needed to take images and build models as soon as possible to gauge whether the method was working.
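
One common pattern for an automatic flight path around a single building is an orbit. The sketch below generates evenly spaced waypoints on a circle around the building, each with a compass heading that points the camera back at the center. It is a hypothetical illustration, not the API of any particular flight-planning app; the local east/north coordinates, the `Waypoint` fields and the angle step are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Waypoint:
    x_m: float          # east offset from the building center (hypothetical local frame)
    y_m: float          # north offset from the building center
    alt_m: float        # altitude above the take-off point
    heading_deg: float  # compass heading that points the camera at the center


def orbit_waypoints(radius_m: float, alt_m: float, step_deg: float) -> list[Waypoint]:
    """Evenly spaced waypoints on a circle, no more than `step_deg` apart."""
    if not 0.0 < step_deg <= 360.0:
        raise ValueError("step_deg must be in the range (0, 360]")
    n = math.ceil(360.0 / step_deg)  # round up so the actual step never exceeds step_deg
    points = []
    for i in range(n):
        theta = math.radians(i * 360.0 / n)  # measured clockwise from north
        x = radius_m * math.sin(theta)
        y = radius_m * math.cos(theta)
        heading = (math.degrees(theta) + 180.0) % 360.0  # face back toward the center
        points.append(Waypoint(x, y, alt_m, heading))
    return points


if __name__ == "__main__":
    # Hypothetical orbit: 20 m radius, 30 m altitude, a photo every 15 degrees.
    for wp in orbit_waypoints(20.0, 30.0, 15.0)[:4]:
        print(wp)
```

A smaller angle step gives more overlap between neighboring shots. An orbit like this can still miss details, which is where the manual flying described above comes in.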

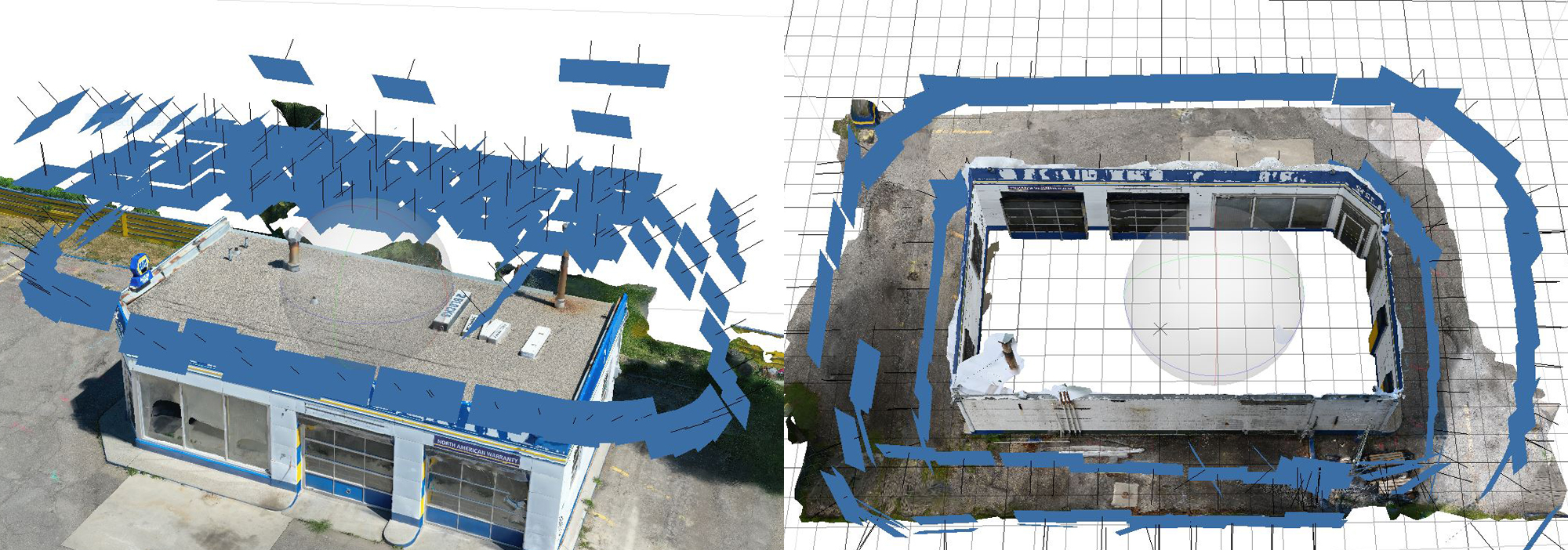

Drone photographs of a vent hood and the model detail

We also take a series of photographs of these buildings from the ground, concentrating on details behind bushes or under awnings. While the software is very capable of capturing these elements, the images need to be of quite high quality. The depth of field has to be as deep as possible so the element is sharp from front to back, and the angle between the camera and the object has to be fairly consistent.
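
The depth-of-field requirement can be checked before a shoot with the standard thin-lens approximations based on the hyperfocal distance. The sketch below assumes a 0.03 mm circle of confusion, a common value for full-frame sensors; the 35 mm lens and 3 m subject distance in the usage lines are hypothetical.

```python
import math


def depth_of_field(focal_mm: float, f_number: float, subject_mm: float,
                   coc_mm: float = 0.03) -> tuple[float, float]:
    """Return the (near, far) limits of acceptable sharpness, in millimetres.

    coc_mm is the circle of confusion; 0.03 mm is a common full-frame value.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


if __name__ == "__main__":
    # Hypothetical: 35 mm lens focused on a detail 3 m away.
    for n in (4, 8, 16):
        near, far = depth_of_field(35.0, n, 3000.0)
        print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Stopping down widens the sharp zone, at the cost of a slower shutter speed or higher ISO, and very small apertures eventually soften the whole image through diffraction.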

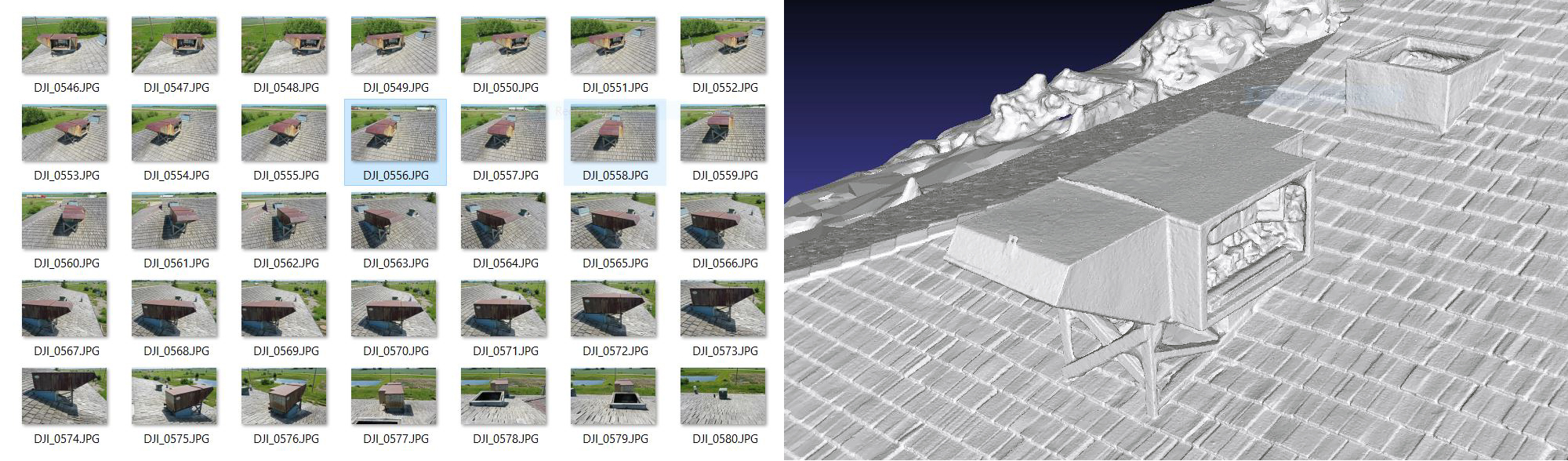

(insert two images of a building detail captured with multiple photographs)

For both of these formats, the best parts of an image for depth information are the sides of an element. When the element of interest appears in multiple frames, its sides give the best information about its three-dimensionality. If there are not enough images in which the element's sides progress from visible to hidden as it moves toward the center of the frame, the software will not be able to work out how far the element sticks out from, or recedes into, the surrounding surface.
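
A simplified two-view model shows why that progression of views matters. For two parallel pinhole cameras a baseline B apart, a point at depth Z shifts by a disparity d = f·B/Z pixels between the frames, so a surface that sticks out from a wall shifts slightly more than the wall behind it. The sketch below assumes this idealised geometry (photogrammetry software actually solves for many views with unknown camera positions), and the focal length in pixels and the distances in the usage lines are hypothetical.

```python
def disparity_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Horizontal shift (pixels) of a point between two parallel pinhole cameras."""
    return focal_px * baseline_m / depth_m


def depth_from_disparity(focal_px: float, baseline_m: float, disparity: float) -> float:
    """Invert the relation above: recover depth (m) from a measured shift."""
    return focal_px * baseline_m / disparity


def protrusion_parallax(focal_px: float, baseline_m: float,
                        wall_depth_m: float, protrusion_m: float) -> float:
    """Extra shift (pixels) of a face that sticks out `protrusion_m` in front of a wall."""
    wall = disparity_px(focal_px, baseline_m, wall_depth_m)
    front = disparity_px(focal_px, baseline_m, wall_depth_m - protrusion_m)
    return front - wall


if __name__ == "__main__":
    # Hypothetical: 4000 px focal length, wall 10 m away, box sticking out 10 cm.
    for baseline in (1.0, 0.1):
        extra = protrusion_parallax(4000.0, baseline, 10.0, 0.1)
        print(f"camera moved {baseline} m: box shifts {extra:.2f} px more than the wall")
```

In this example the box shifts about 4 pixels more than the wall when the camera moves 1 m, but only about 0.4 pixels when it moves 10 cm, which is a much harder difference to measure reliably.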

(insert 3 images with a box sticking out visible on both sides)

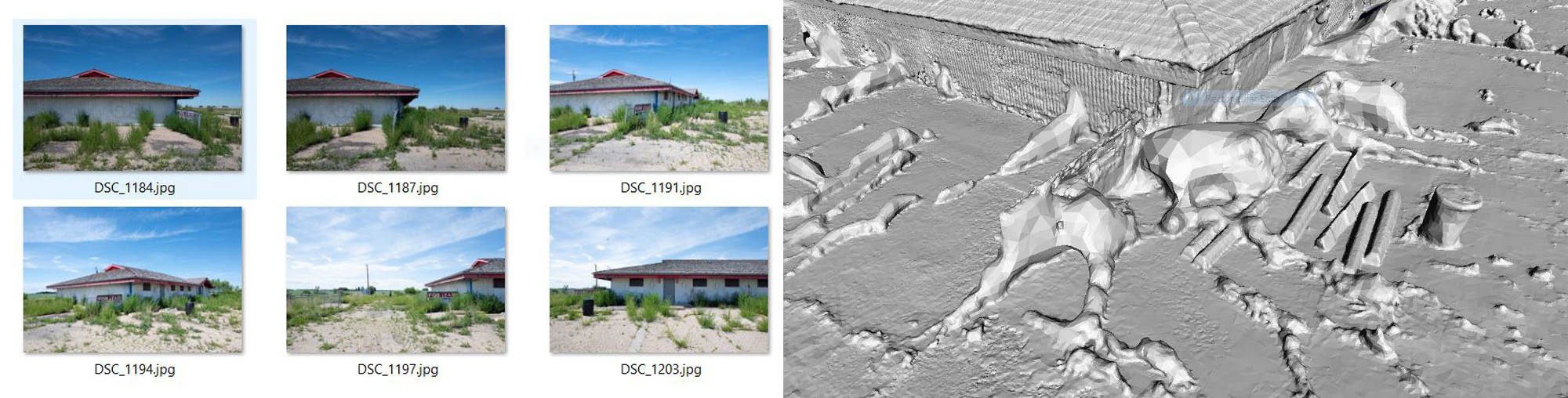

This can be a problem when we concentrate on a building surface without paying attention to things extending out from it, such as pipes and ducts on a roof, or hoses, boxes, lights and cameras on the sides of a building. If we were photographing a person with their arms held straight out to create a 3D model, neglecting the outstretched arms would affect how the hands are rendered in the final model. In this example I have shown six of twenty photos that concentrate on the corner of a building rather than the fence, along with a render of how the fence model turned out.

Six of twenty photos with a fence in the frame and a render of the fence model

The photographs themselves can be captured in different formats, such as JPEG, PNG or raw files, but their quality (focus, lighting, etc.) has to be quite high: the sharper an image is, the better. For our purposes the drone captures images as JPEGs, and the ground images are captured in raw. The raw format gives us some ability to draw detail out of shadows, and possibly to recover detail in bright areas. When there are both bright and dark areas in the same photograph, an additional copy may be made so that each can be adjusted separately to get the best model. It is trickier to maintain a balance of lighting with the drone saving as JPEG, but the drone's raw files are too large (40 MB each) and do not give the range of adjustment that our full-frame mirrorless camera does.
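
The two-copy approach for photographs with both bright and dark areas can be sketched on raw-like data. Raw sensor values are roughly linear in light, so an exposure change of `ev` stops amounts to multiplying by 2^ev before the values are encoded for display. This is a simplification: it assumes values normalised so that 1.0 is sensor white, and it uses a plain 2.2 gamma in place of the tone curves that real raw developers apply. `export_copy` is a hypothetical helper.

```python
def export_copy(linear: list[float], ev: float, gamma: float = 2.2) -> list[int]:
    """Return an 8-bit copy of `linear` pushed or pulled by `ev` stops."""
    out = []
    for v in linear:
        # Exposure change in linear space, then clip to the displayable range.
        scaled = min(max(v * 2.0 ** ev, 0.0), 1.0)
        # Simple power-law encoding (an approximation of sRGB).
        out.append(round(255 * scaled ** (1.0 / gamma)))
    return out


if __name__ == "__main__":
    # Hypothetical pixels: deep shadow, mid-tone, bright sky near sensor white.
    pixels = [0.004, 0.18, 0.9]
    shadows = export_copy(pixels, ev=+2)     # copy adjusted for shadow detail
    highlights = export_copy(pixels, ev=-1)  # copy adjusted for highlight detail
    print("shadow copy:   ", shadows)
    print("highlight copy:", highlights)
```

In practice we would make these adjustments in raw development software rather than in code. The sketch only shows why a single exposure cannot serve both extremes: brightening the image enough to lift the shadows pushes the sky to 255, where its detail is lost.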

In most cases the photographs need little manipulation because of how we take them, but there are times when they have to be adjusted manually, which can add to the time it takes to generate a usable model. Most of the time this is to correct shadows, so that the building model has the same brightness and detail on all sides.

The image capture process is a relatively small part of the process of generating models of the gasoline stations we have found through location scouting. It is, however, the most integral part: without it, the station models would have to be built entirely from scratch. Being on location and gaining permission is a rewarding experience in which we learn stories about the people who worked there and the different eras such a simple structure lived through, from when it was the only business on a block or in a town to when it became the shell of someone else’s business dream. A declining gasoline station can live at our periphery, but it connects us to the distant past.